"ChatGPT is Lazier."

How to Evaluate GPT-4’s Performance Without Bias.


12/12/2023

A Mashable article by Cecily Mauran, published on December 11, 2023, claims that OpenAI has admitted that ChatGPT is "lazier."

Here is what the actual X post from ChatGPT (@ChatGPTapp) stated:

However, did OpenAI actually admit that GPT-4 had become lazier? I don't think so. To show you what I mean, let's analyze the text. The message acknowledges user feedback about GPT-4's performance without explicitly admitting that GPT-4 is "lazier." To put it another way, the message only says, "We heard you. You think GPT-4 is lazier. We'll look into it."

Let's Be Rational People Here for a Moment

To show that GPT-4 is lazier, we need statistical evidence. Yes, I hate to be the lame guy in the room who is not carrying a pitchfork or torch. Still, we need evidence before we can conclude that a model is lazier (or conclude pretty much anything in life). Here is a list of measurements that would come in handy.

Word and Character Count Analysis: Compare the average word and character count of responses generated by GPT-4 over time. A noticeable increase in brevity or a decrease in complexity could suggest a decline in effort.

Repetition Frequency: Analyze how often GPT-4 repeats phrases, sentences, or ideas within its responses. An increase in repetition may indicate a lack of creativity or thoroughness.

Diversity of Vocabulary: Measure the diversity of vocabulary used by GPT-4 in its responses. A decrease in unique words and phrases might indicate reduced linguistic richness.

Coherence and Contextual Understanding: Assess the model's ability to maintain coherence and context within longer responses. An increase in incoherent or off-topic content could suggest a decline in focus.

Grammar and Spelling Errors: Count the grammar and spelling errors in GPT-4's responses. An increase in errors may indicate a decrease in proofreading and quality control.

Response Length vs. Prompt Complexity: Analyze whether GPT-4 provides shorter responses to complex prompts that historically required more extensive explanations.

Comparison with Earlier Versions: Compare GPT-4's performance with earlier model versions (e.g., GPT-3) to identify any noticeable decline in response quality.

User Satisfaction Surveys: Conduct user surveys to gather feedback on the perceived quality of GPT-4's responses and whether users find them "lazier" than previous versions.

Time Efficiency: Measure the time it takes for GPT-4 to generate responses. A decrease in response time might indicate less effort in developing thoughtful answers.

Engagement Metrics: Monitor user engagement metrics, such as session duration or interaction frequency. A decrease in user engagement could be indicative of declining quality.
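Several of the surface measures above (word and character counts, repetition frequency, and vocabulary diversity) can be computed with a few lines of code. The following is a minimal sketch, not a validated methodology: the function name `response_metrics`, the choice of three-word sequences for repetition, and the simple tokenizer are all assumptions made for illustration.

```python
import re
from collections import Counter


def response_metrics(text: str, n: int = 3) -> dict:
    """Compute simple surface metrics for a single model response.

    Hypothetical helper for illustration; the tokenizer and n-gram
    size are arbitrary choices, not an established standard.
    """
    # Lowercase and split into rough word tokens
    words = re.findall(r"[A-Za-z0-9']+", text.lower())

    # Build overlapping n-word sequences to detect repeated phrasing
    ngrams = [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]
    counts = Counter(ngrams)
    # Count n-gram occurrences that repeat an earlier occurrence
    repeated = sum(c - 1 for c in counts.values() if c > 1)

    return {
        "char_count": len(text),
        "word_count": len(words),
        # Unique words divided by total words (vocabulary diversity)
        "type_token_ratio": len(set(words)) / len(words) if words else 0.0,
        # Share of n-grams that are repeats (repetition frequency)
        "repeated_ngram_rate": repeated / len(ngrams) if ngrams else 0.0,
    }
```

Running this over responses to the same set of prompts, collected at different dates, would give comparable numbers per response. Note that the type-token ratio naturally falls as responses get longer, so it is only meaningful when comparing responses of similar length.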

To prove that GPT-4 is "lazier," it would be essential to gather and analyze these kinds of data over time and establish a statistical trend that supports the claim.
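As one way to check whether a difference between two time periods is more than noise, here is a minimal sketch of a two-sided permutation test on the mean of a metric (for example, word count per response). The function name `permutation_test` and its parameters are hypothetical, and a permutation test is just one reasonable choice; other tests would work too.

```python
import random
from statistics import mean


def permutation_test(before, after, n_resamples=10_000, seed=0):
    """Two-sided permutation test on the difference in means.

    `before` and `after` are lists of one metric (e.g., word counts)
    measured on responses from two time periods. Hypothetical helper
    for illustration only.
    """
    rng = random.Random(seed)
    observed = mean(after) - mean(before)
    pooled = list(before) + list(after)
    n_before = len(before)

    extreme = 0
    for _ in range(n_resamples):
        # Randomly reassign responses to the two periods
        rng.shuffle(pooled)
        diff = mean(pooled[n_before:]) - mean(pooled[:n_before])
        if abs(diff) >= abs(observed):
            extreme += 1

    # Add-one correction avoids reporting a p-value of exactly zero
    p_value = (extreme + 1) / (n_resamples + 1)
    return observed, p_value
```

A small p-value would suggest that the change in the metric is unlikely to be chance alone. It would not, by itself, show that the model is "lazier," only that the measured quantity shifted between the two samples.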

What Term is Used?

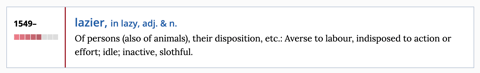

The Oxford English Dictionary (OED) lists several meanings for laziness. On closer inspection, which meaning applies depends on the context.

T